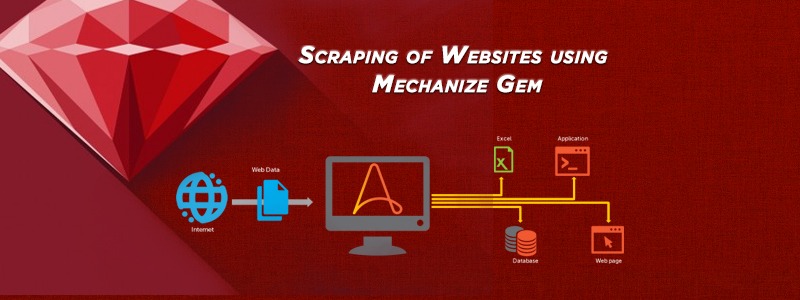

Web scraping (also known as web harvesting or web data extraction) is a software technique for extracting information from websites. Mechanize is a Ruby library for automating interaction with websites: the Mechanize gem automatically stores and sends cookies, follows redirects, and can follow links and submit forms.

Form fields can be populated and submitted, and Mechanize keeps a history of the sites you have visited. It uses Nokogiri to parse each page for the relevant forms and buttons, and provides a simplified interface for manipulating web forms.

Requirements:

- Ruby 1.8.7, 1.9.2, or 1.9.3
- Nokogiri

Getting started with Mechanize:

Let’s Fetch a Page!

First things first. Make sure that you’ve required mechanize and instantiated a new Mechanize object:

```ruby
require 'rubygems'
require 'mechanize'

agent = Mechanize.new
```

Now we’ll use the agent we’ve created to fetch a page. Let’s fetch Google with our Mechanize agent:

```ruby
page = agent.get('http://google.com/')
```

Finding Links

Mechanize returns a page object whenever you get a page, post, or submit a form. When a page is fetched, the agent will parse the page and put a list of links on the page object.

Now that we’ve fetched Google’s homepage, let’s try listing all of the links:

```ruby
page.links.each do |link|
  puts link.text
end
```

Listing links is useful, but Mechanize also provides a few shortcuts for finding a specific link to click. Let’s say we want to click the link whose text is ‘News’. Without the shortcuts, we would have to search the list ourselves:

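```ruby
page = agent.page.links.find { |l| l.text == 'News' }.click
```

Mechanize gives us a shortcut instead. link_with returns the first link whose text matches:

```ruby
page = agent.page.link_with(:text => 'News').click
```

There could be several links with the same text. The plural form, links_with, returns all of them, so to click the second ‘News’ link:

```ruby
agent.page.links_with(:text => 'News')[1].click
```

We can also find a link by its href:

```ruby
page.link_with(:href => '/something')
```

Filling Out Forms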

Let’s continue with our Google example. Here’s the code we have so far:

```ruby
require 'rubygems'
require 'mechanize'

agent = Mechanize.new
page = agent.get('http://google.com/')
```

If we pretty-print the page, we can see that it contains one form, named ‘f’, with a couple of buttons and a few fields:

```ruby
require 'pp' # only needed on Ruby versions older than 2.5
pp page
```

Now that we know the name of the form, let’s get it from the page:

```ruby
google_form = page.form('f')
```

Here is the code all together. It also fills in the form’s search field, named q, submits the form, and pretty-prints the results page:

```ruby
require 'rubygems'
require 'mechanize'
require 'pp'

agent = Mechanize.new
page = agent.get('http://google.com/')
google_form = page.form('f')
google_form.q = 'ruby mechanize'   # set the field named "q"
page = agent.submit(google_form)   # submit returns the results page
pp page
```

Scraping Data

Mechanize uses Nokogiri to parse HTML. What does this mean for you? You can treat a Mechanize page like a Nokogiri object. After you have used Mechanize to navigate to the page you need to scrape, scrape it using Nokogiri methods:

```ruby
agent.get('http://someurl.com/').search("p.posted")
```

The expression given to Mechanize::Page#search may be a CSS expression or an XPath expression:

```ruby
agent.get('http://someurl.com/').search(".//p[@class='posted']")
```


RailsCarma has been working with the Ruby on Rails framework since its early days and has handled over 250 RoR projects. With a team of more than 100 RoR developers well-versed in the latest techniques and tools, RailsCarma is well suited to help with all your development needs. We are happy to help with your queries; use our Contact Us page to get in touch.